The rise of artificial intelligence (AI) has opened new avenues for improving efficiency and innovation across industries. In user experience (UX) design, AI tools offer significant potential to transform traditional methods by streamlining processes such as user discovery, ideation, prototyping, usability testing, and data analysis [1, 5, 7, 8]. AI tools can reduce the time and cost barriers associated with traditional user-centered design methods [2, 4] and facilitate collaboration among UX professionals [6].

While prior research has demonstrated AI’s capacity to assist designers, the direct impact of AI-driven design on the end-user experience remains underexplored.

This study evaluates the effectiveness of AI-driven design from the user's perspective, assessing whether AI integration improves task efficiency, ease of use, and overall satisfaction with app interfaces.

During my own job search, I experienced firsthand the overwhelming and time-consuming nature of the application process. Customizing resumes, writing tailored cover letters, and navigating inefficient platforms often felt frustrating and discouraging. These challenges inspired me to design an app that streamlines the job application process, helping users save time, stay organized, and feel more confident in their efforts. By addressing these common pain points, I aimed to create a tool that empowers job seekers to focus on showcasing their skills rather than battling application hurdles.

I analyzed several Reddit posts to understand how people apply for jobs and what frustrates them about the process [9]. This approach allowed me to quickly gather diverse insights from a broad range of experiences. Key takeaways included:

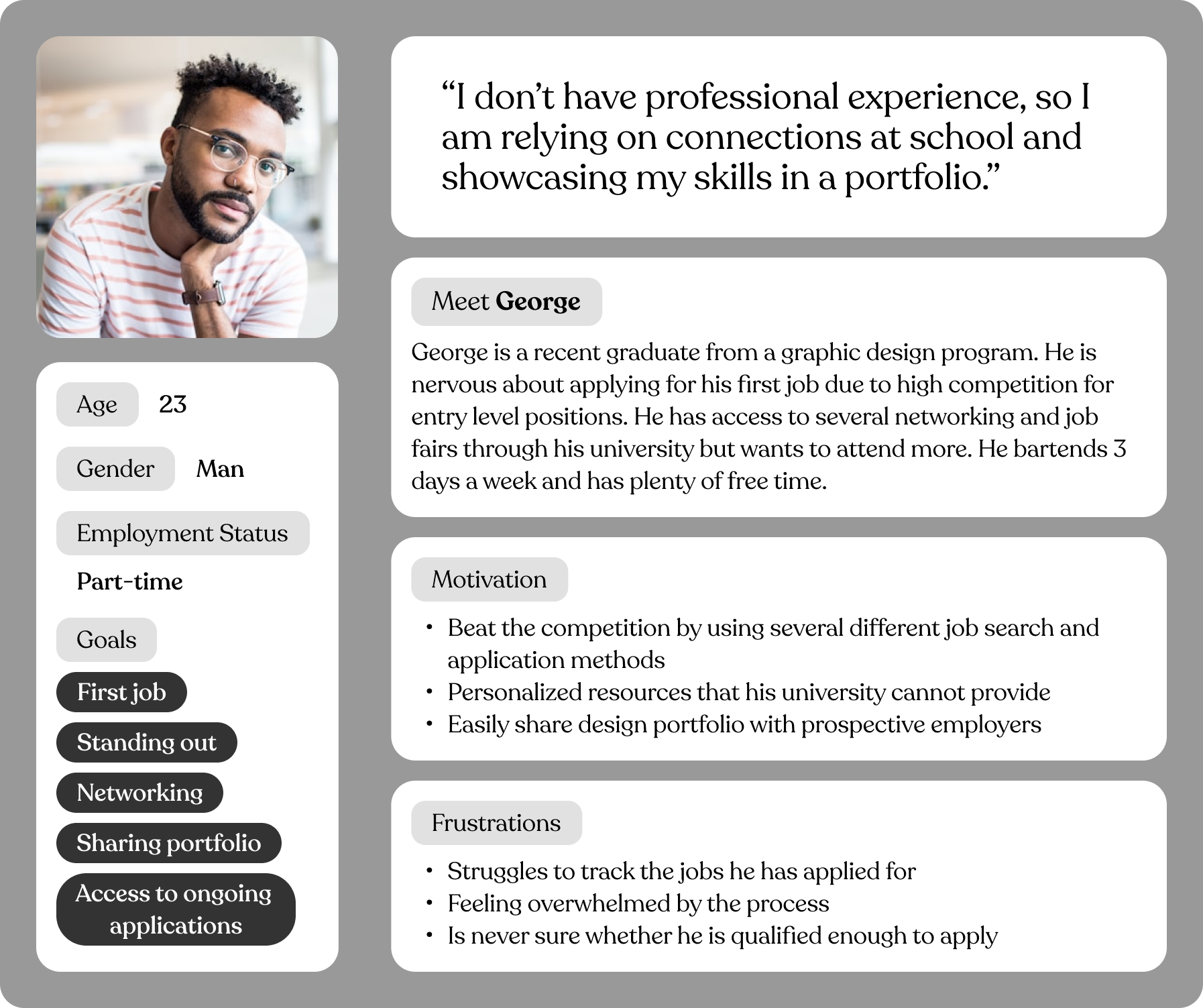

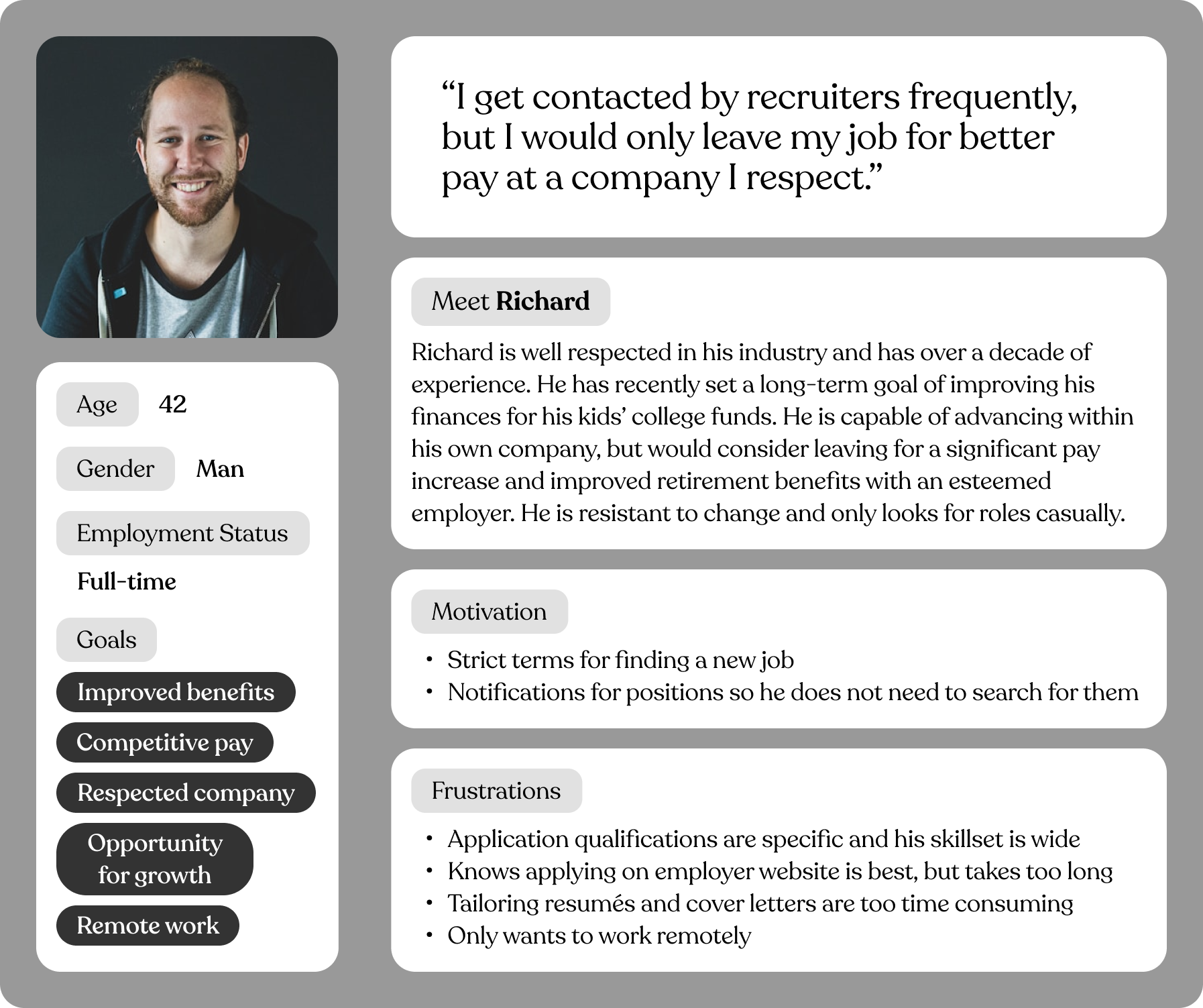

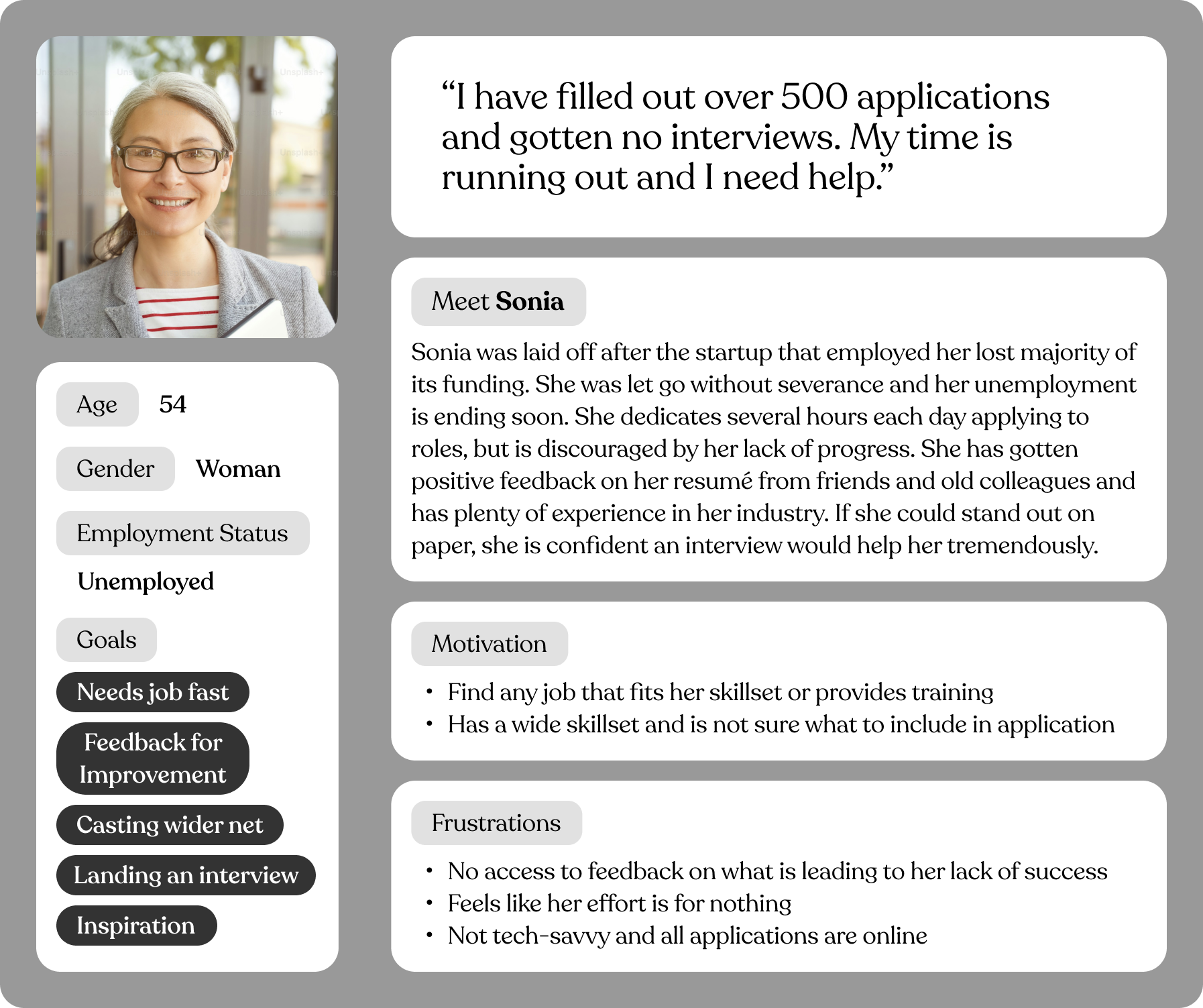

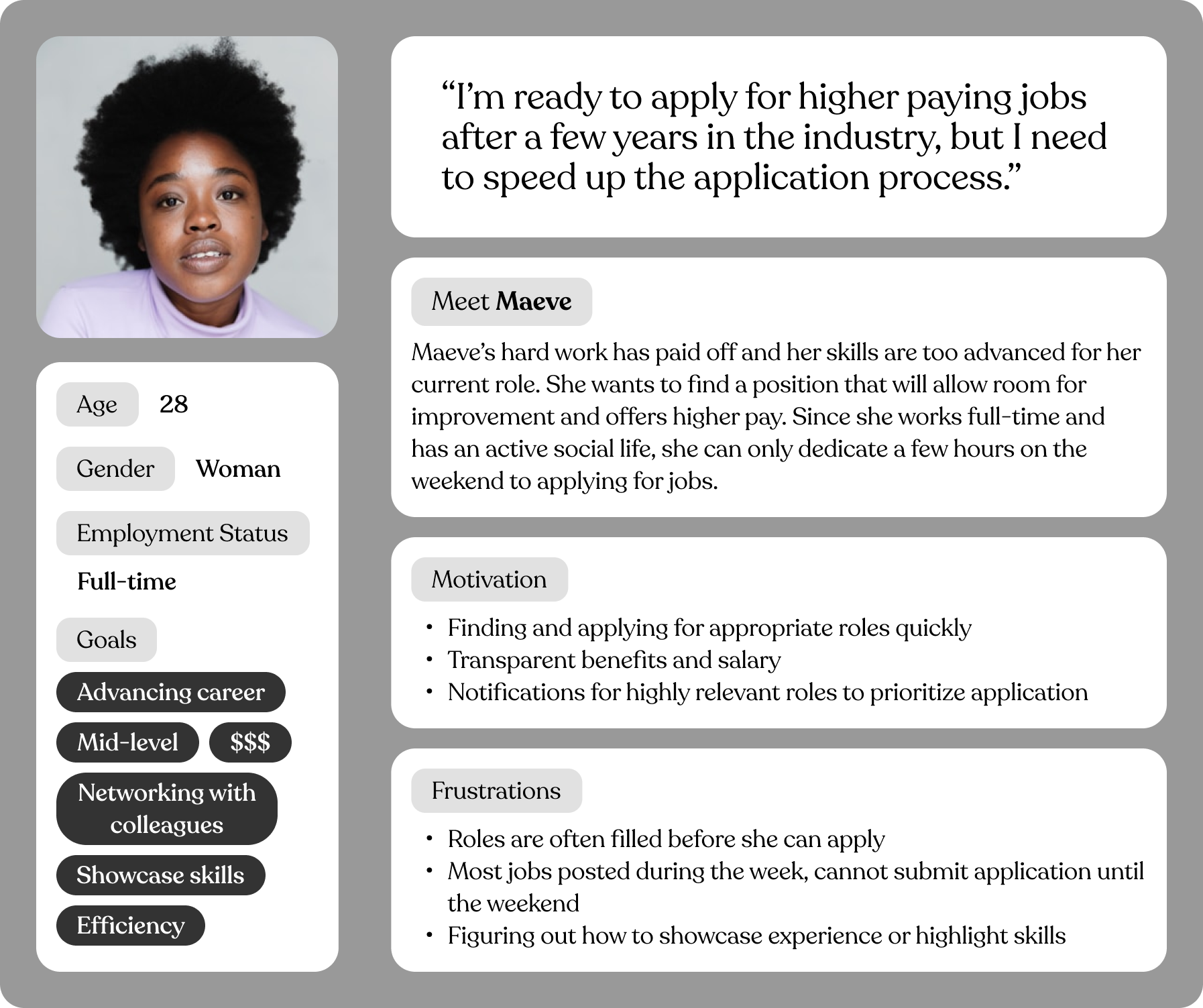

The insights gathered from Reddit user research informed the development of personas that represent the primary user groups. These personas encapsulate the frustrations, goals, and behaviors of users, enabling a more targeted design approach that addresses their specific needs and pain points.

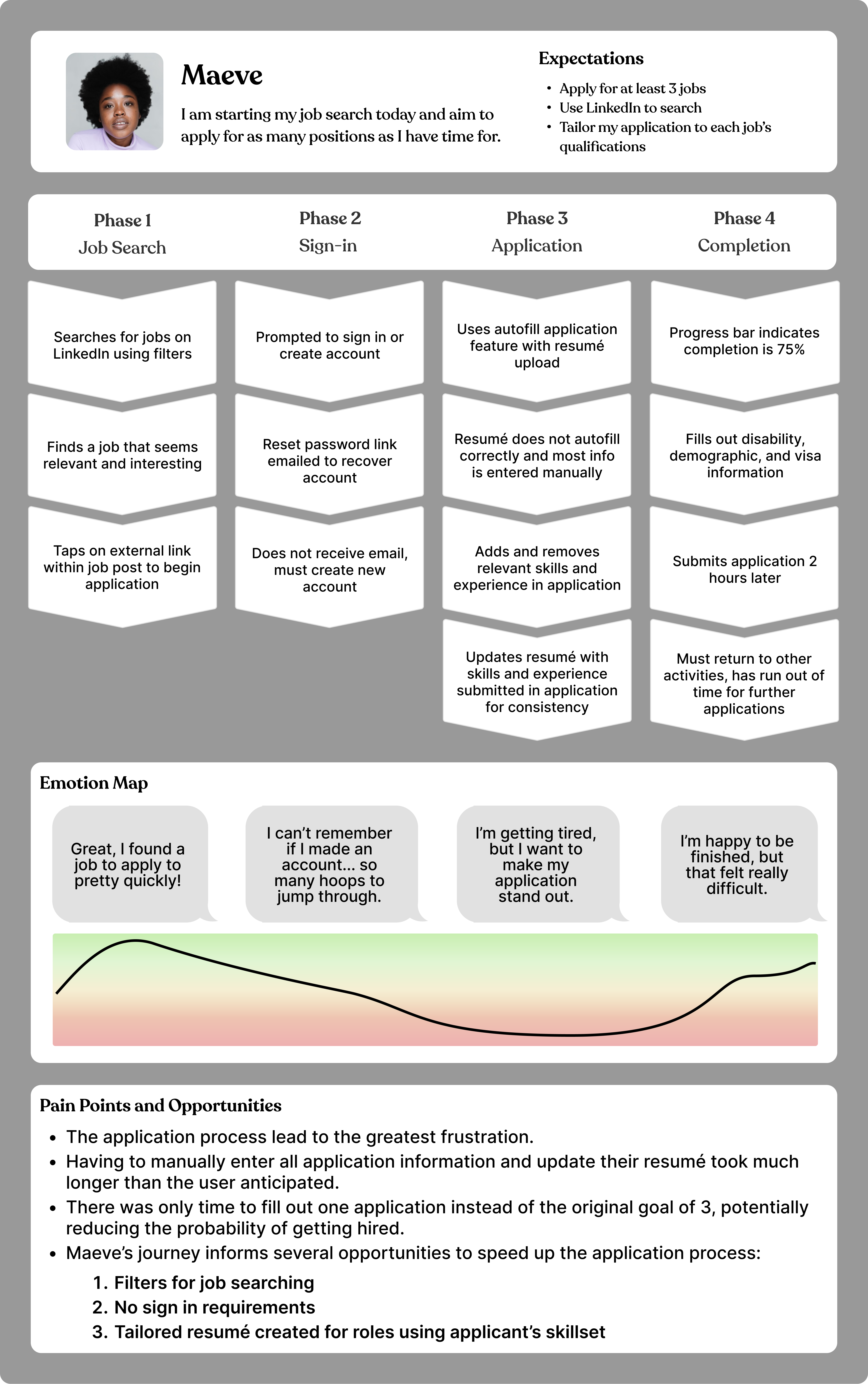

A user journey for Maeve was designed following best practices outlined by Nielsen Norman Group to ensure it effectively guided the prototype's development. Journey mapping is a powerful tool for understanding a user's experience, uncovering pain points, and identifying opportunities for improvement [3]. By visualizing Maeve's process step by step, the journey map provided a clear narrative of her frustrations, emotions, and goals to inform targeted design solutions.

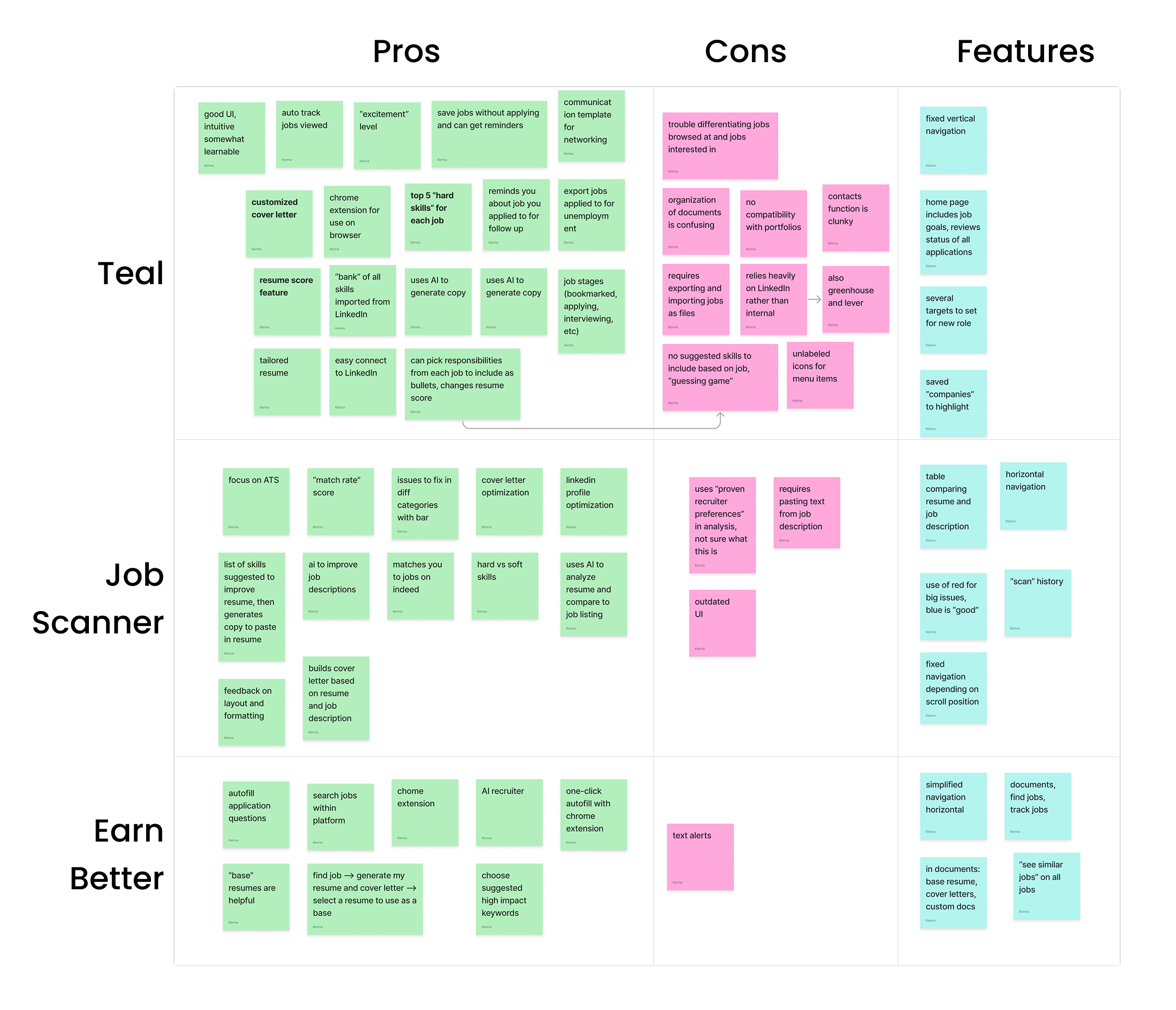

Based on a review of blog posts, I selected three job search apps, Teal, JobScanner, and EarnBetter, for competitive analysis. I evaluated each platform by performing three key user tasks: registration, job searching, and job application. This analysis revealed several features to prioritize for a positive user experience in my design:

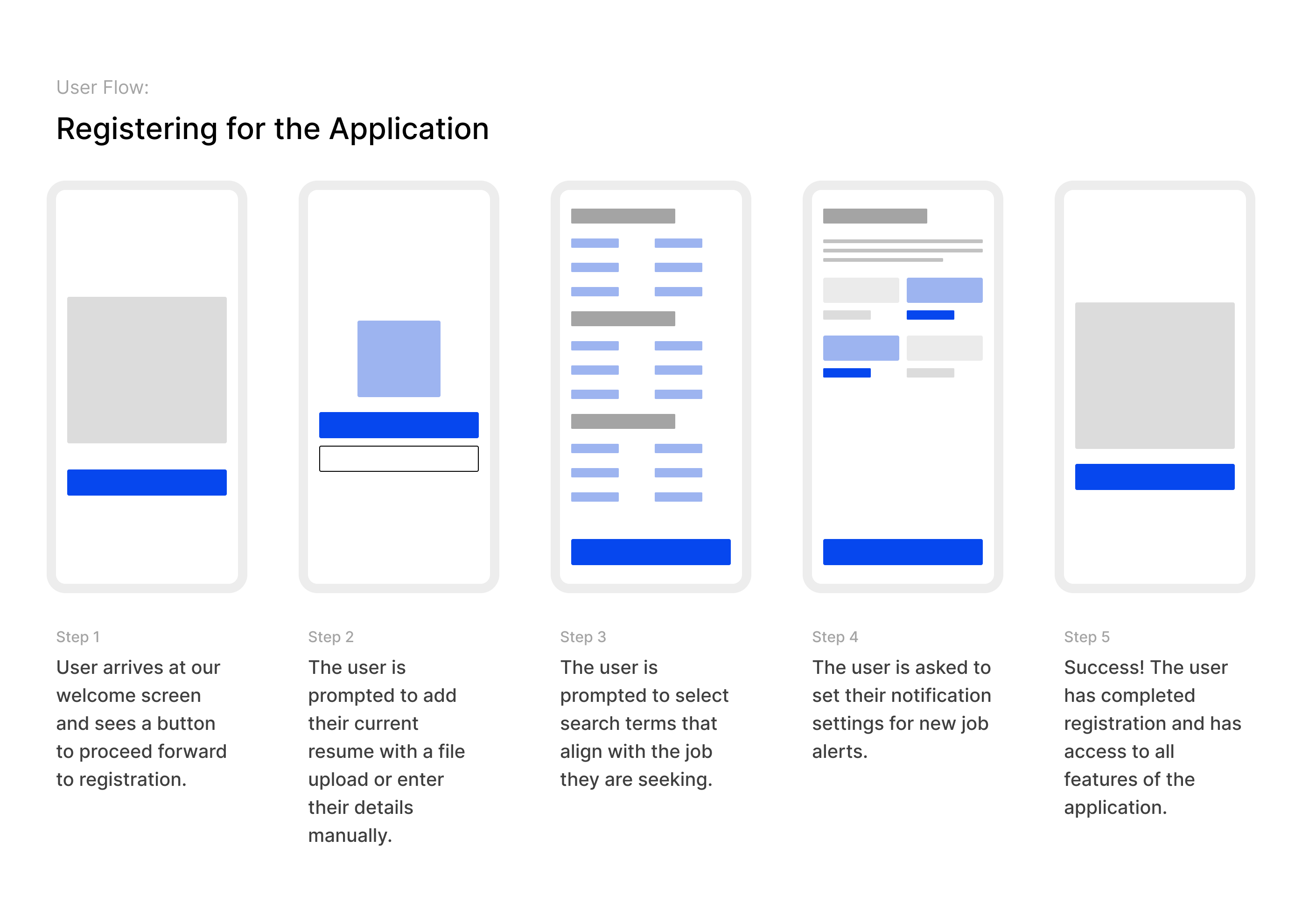

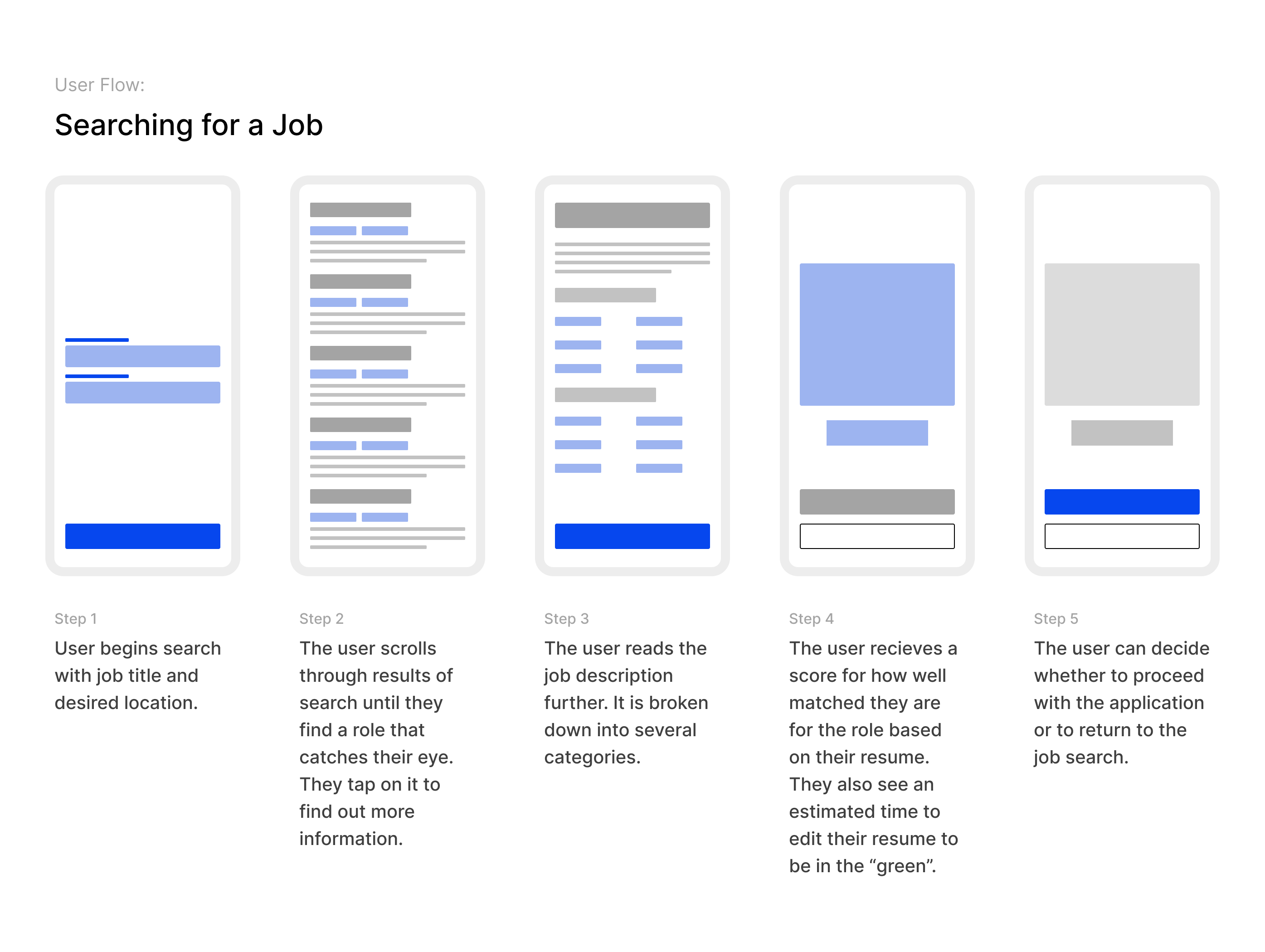

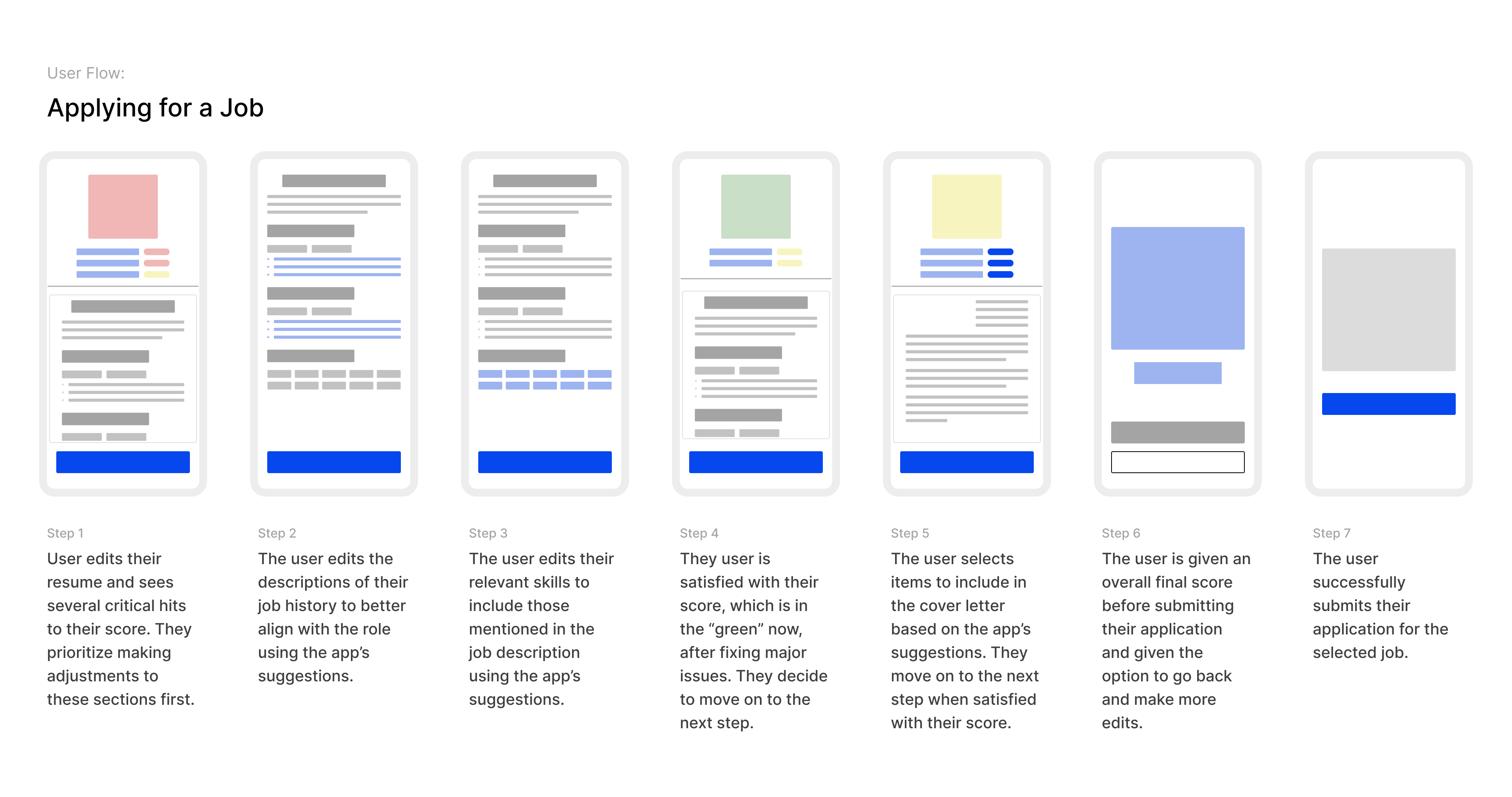

User flows were instrumental in this project as they provided a structured way to visualize user journeys and identify key points for improvement. By outlining each step a user takes, I was able to anticipate potential challenges and ensure that design decisions aligned with user needs. This guided the development of efficient, intuitive prototypes that improved task clarity and minimized friction in key processes.

The registration flow streamlines account creation by offering both manual entry and resume upload options. Uploading a resume allows the system to auto-fill details, reducing manual input. Users can customize job alerts and preferences, improving personalization while maintaining a structured experience.

The job search flow supports intuitive exploration of opportunities by allowing users to apply structured filters or explore listings freely. A dynamic match score evaluates the alignment between the user's resume and the job posting, offering insights into areas for improvement. This guided yet flexible approach enhances the job search experience.
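The report does not specify how the match score is computed. As one possible illustration, the sketch below assumes a simple keyword-overlap measure: the share of a posting's required keywords that also appear in the resume, with unmatched keywords surfaced as areas for improvement. The function names `tokenize` and `matchScore` are hypothetical, and a production scorer would likely need stemming, synonyms, and multi-word phrases.

```typescript
// Hypothetical sketch: the prototypes' actual match-score logic is not
// specified. This assumes a keyword-overlap percentage with single-word keywords.

/** Lowercase a text and split it into a set of word tokens (keeps "c++", "c#"). */
function tokenize(text: string): Set<string> {
  const words = text.toLowerCase().match(/[a-z0-9+#]+/g) ?? [];
  return new Set(words);
}

/**
 * Percentage (0-100) of the posting's required keywords found in the resume,
 * plus the keywords that are missing (shown to users as areas to improve).
 */
function matchScore(
  resumeText: string,
  requiredKeywords: string[],
): { score: number; missing: string[] } {
  const resumeTokens = tokenize(resumeText);
  // Deduplicate keywords case-insensitively.
  const keywords = Array.from(new Set(requiredKeywords.map((k) => k.toLowerCase())));
  if (keywords.length === 0) {
    return { score: 0, missing: [] };
  }
  const missing = keywords.filter((k) => !resumeTokens.has(k));
  const matched = keywords.length - missing.length;
  return { score: Math.round((matched / keywords.length) * 100), missing };
}

const result = matchScore(
  "Built React dashboards in TypeScript; ran usability tests.",
  ["React", "TypeScript", "Figma", "usability"],
);
console.log(result.score, result.missing); // score 75; "figma" is missing
```

Recomputing the score after each resume edit is one way the "dynamic" behavior could be driven, since the score changes as soon as a missing keyword is added.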

The application flow helps users refine their resumes and improve their job match score. Users can revise work-experience descriptions and skills sections based on system recommendations to better align with target roles. Real-time feedback and visual indicators highlight changes, so users can easily track their improvements.

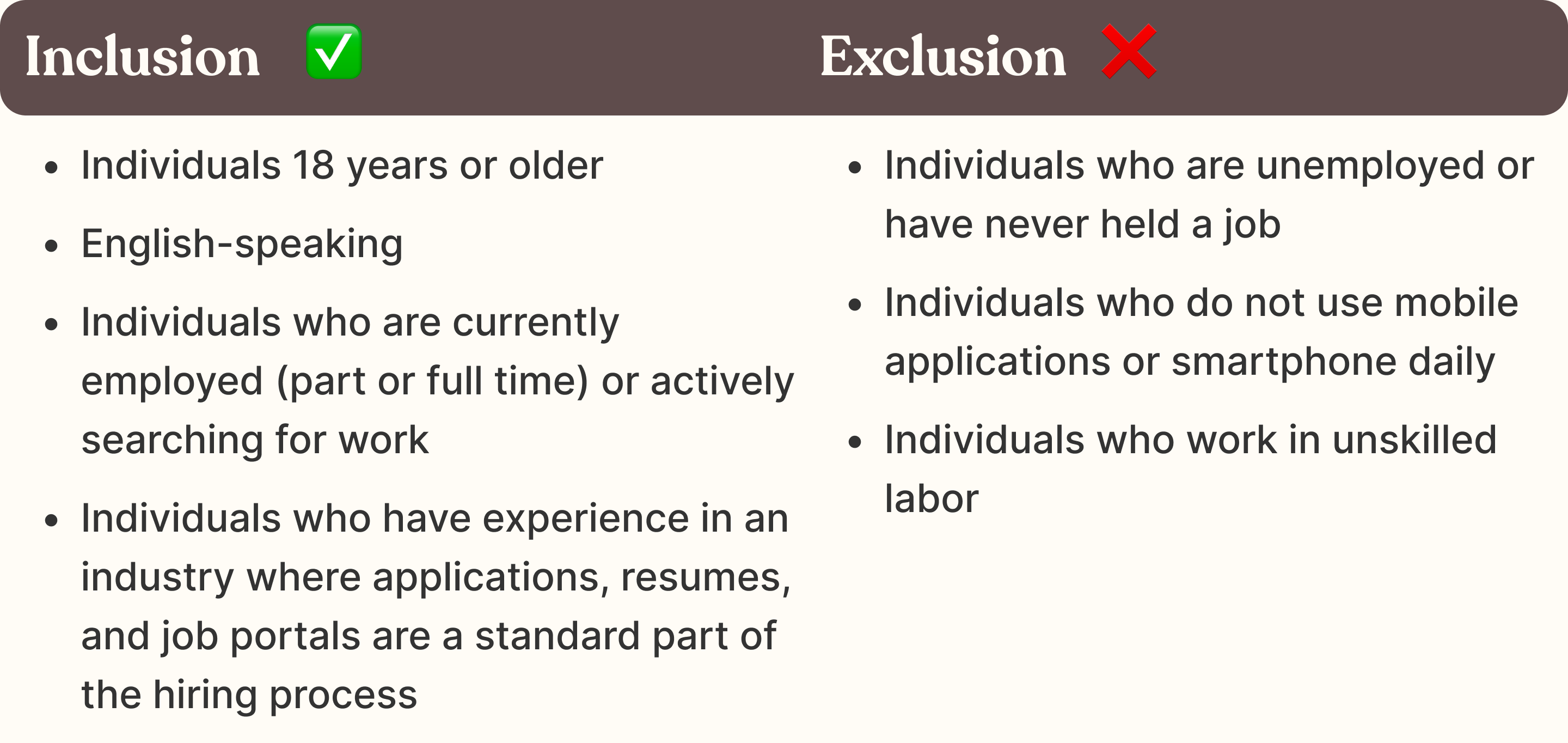

Six participants were recruited through UserTesting and screened for experience with job applications and digital tools. One additional participant was recruited through convenience sampling for a pilot test.

Participants completed equivalent tasks in both prototypes (human-designed vs. AI-assisted), with counterbalancing alternating which prototype each participant used first. Performance was measured by task completion time, while satisfaction and preferences were measured through Likert-scale questions and open-ended feedback.
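Alternating which prototype participants saw first amounts to a simple AB/BA assignment. The sketch below is a hypothetical illustration; the `counterbalance` function, the `SessionPlan` type, and the participant IDs are assumptions, not the study's actual tooling.

```typescript
// Hypothetical sketch of alternating (AB/BA) counterbalancing.
// Participant IDs are illustrative, not the study's records.

type Prototype = "Human-Designed" | "AI-Designed";

interface SessionPlan {
  participantId: string;
  order: [Prototype, Prototype];
}

/** Alternate the starting prototype so each one is seen first about half the time. */
function counterbalance(participantIds: string[]): SessionPlan[] {
  return participantIds.map((participantId, i): SessionPlan => ({
    participantId,
    order:
      i % 2 === 0
        ? ["Human-Designed", "AI-Designed"]
        : ["AI-Designed", "Human-Designed"],
  }));
}

const plans = counterbalance(["P1", "P2", "P3", "P4", "P5", "P6"]);
for (const p of plans) {
  console.log(`${p.participantId}: ${p.order.join(" -> ")}`);
}
```

With an even number of sessions each order appears equally often; with an odd number, one order necessarily appears once more than the other.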

The human-designed prototype addresses common job seeker frustrations by emphasizing clarity, guidance, and user control. The registration flow minimizes manual entry by offering both resume uploads for data extraction and manual input options.

Users can customize job preferences and alerts to manage notifications efficiently. The job search flow combines structured filters with flexible browsing, while a dynamic job match score helps users assess their resume's alignment with listings. The application flow provides real-time resume feedback, suggested improvements, and a clear progress tracker to support users in refining their materials efficiently.

AI was given full creative liberty in developing its job application prototype. The user personas and journey maps for this prototype were generated entirely by ChatGPT (GPT-4o) and centered on realistic frustrations such as career transitions, resume gaps, and low confidence. ChatGPT synthesized the user research into actionable insights that shaped key features such as the resume score and an AI cover letter builder.

During the design phase, AI assisted with writing supportive UI copy, creating example responses, and suggesting inclusive interaction patterns. It generated clean, component-based React code using Tailwind CSS that I could easily replicate in Figma, accelerating the prototyping process with rapid iteration. By bridging user empathy with frontend development, AI made it easy to test ideas quickly, stay aligned with user goals, and maintain consistency across the experience.

Sessions were conducted on the Usabilitytesting.com platform, which recorded participants' screens and front-facing cameras. Usability and efficiency were compared across tasks, supported by post-task and post-test Likert-scale surveys measuring satisfaction and ease of use. Open-ended responses and user interactions were analyzed to explore preferences and the influence of AI-generated design elements on engagement.

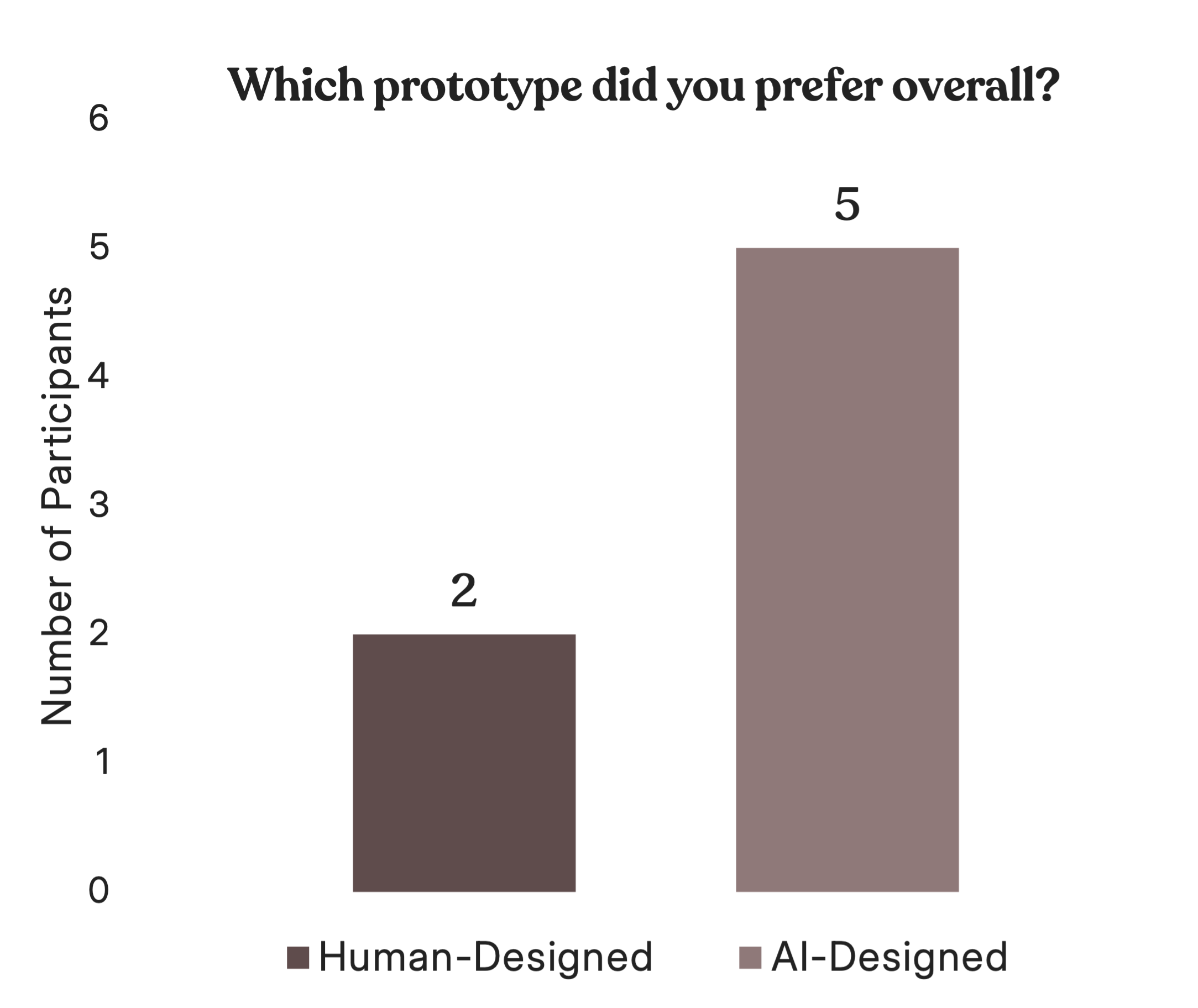

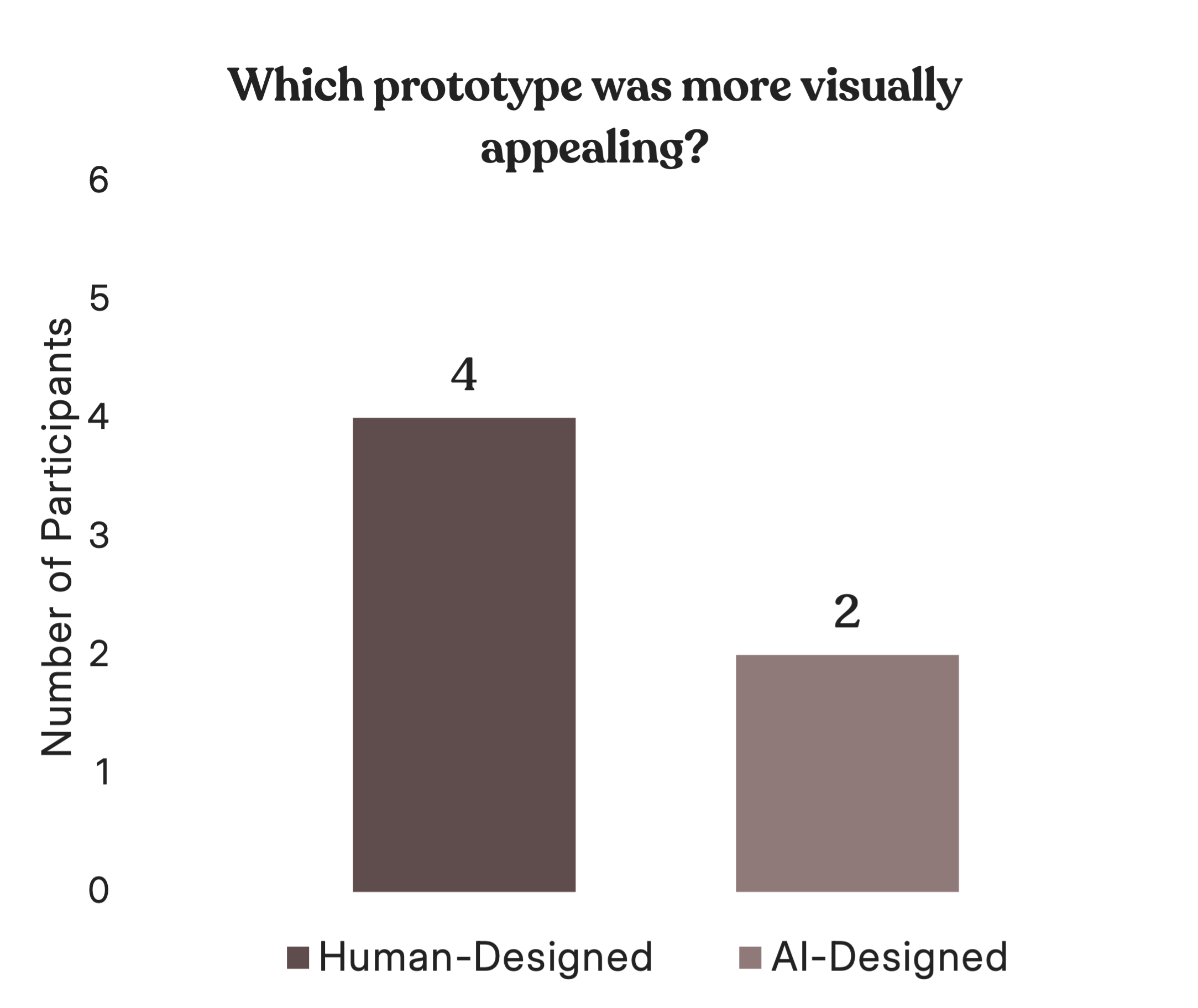

*Participants were shown screenshots of both prototypes and asked to choose prototype "A" or "B".

Participant transcripts were manually coded to identify usability, satisfaction, and feature themes. Comments were categorized by interface areas such as navigation and visual design. Using affinity mapping in FigJam, I clustered feedback by theme and sentiment, allowing patterns, confusion points, and strengths to emerge while preserving individual user perspectives.
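Affinity mapping was done by hand in FigJam, but the grouping step it relies on can be illustrated in code. This hypothetical sketch keys each coded comment by theme and sentiment and collects matching comments into clusters. The `CodedComment` shape, the theme labels, and the sentiment codes are assumptions applied to quotes from this study purely for illustration; they are not the study's actual coding.

```typescript
// Hypothetical sketch: the theme and sentiment codes below are illustrative;
// the study's actual coding and clustering were done manually in FigJam.

type Sentiment = "positive" | "negative" | "neutral";

interface CodedComment {
  theme: string; // e.g. "navigation", "visual design", "efficiency"
  sentiment: Sentiment;
  quote: string;
}

/** Group comments under a "theme / sentiment" key so cluster sizes are visible. */
function clusterByTheme(comments: CodedComment[]): Map<string, CodedComment[]> {
  const clusters = new Map<string, CodedComment[]>();
  for (const c of comments) {
    const key = `${c.theme} / ${c.sentiment}`;
    const group = clusters.get(key) ?? [];
    group.push(c);
    clusters.set(key, group);
  }
  return clusters;
}

const comments: CodedComment[] = [
  { theme: "navigation", sentiment: "negative", quote: "It was weird that I had to hunt for the cover letter option." },
  { theme: "efficiency", sentiment: "positive", quote: "The AI one made finding jobs super fast." },
  { theme: "efficiency", sentiment: "positive", quote: "I loved how simple the resume upload was." },
  { theme: "efficiency", sentiment: "positive", quote: "The AI cover letter saved me so much time." },
];

for (const [key, group] of clusterByTheme(comments)) {
  console.log(`${key}: ${group.length} comment(s)`);
}
```

Keeping each quote inside its cluster, rather than only counting, mirrors the goal of preserving individual user perspectives while patterns emerge.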

Seven digitally fluent professionals, all male and mostly working in the tech industry in the US and Canada, evaluated both prototypes. All were heavy daily mobile users with a high level of comfort with technology.

"It was weird that I had to hunt for the cover letter option. I almost missed it."

"The AI one made finding jobs super fast. I was done in seconds."

"I loved how simple the resume upload was, there were only a few steps."

"The AI cover letter saved me so much time, I would actually use this."

"The match score gauge made [the Human-Designed Prototype] way more appealing. The ideal app would feature [the match score] in the [AI-Designed Prototype]."

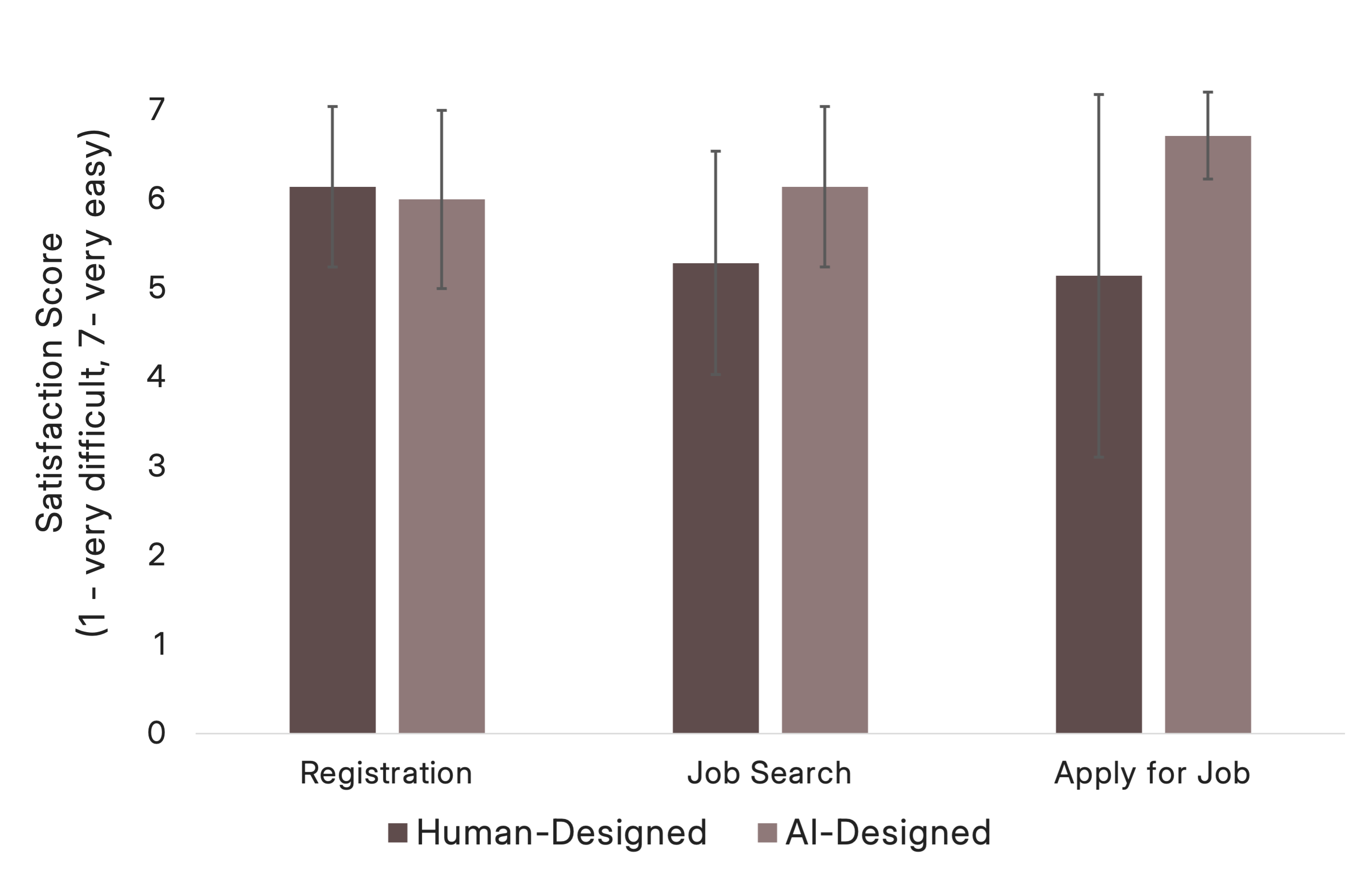

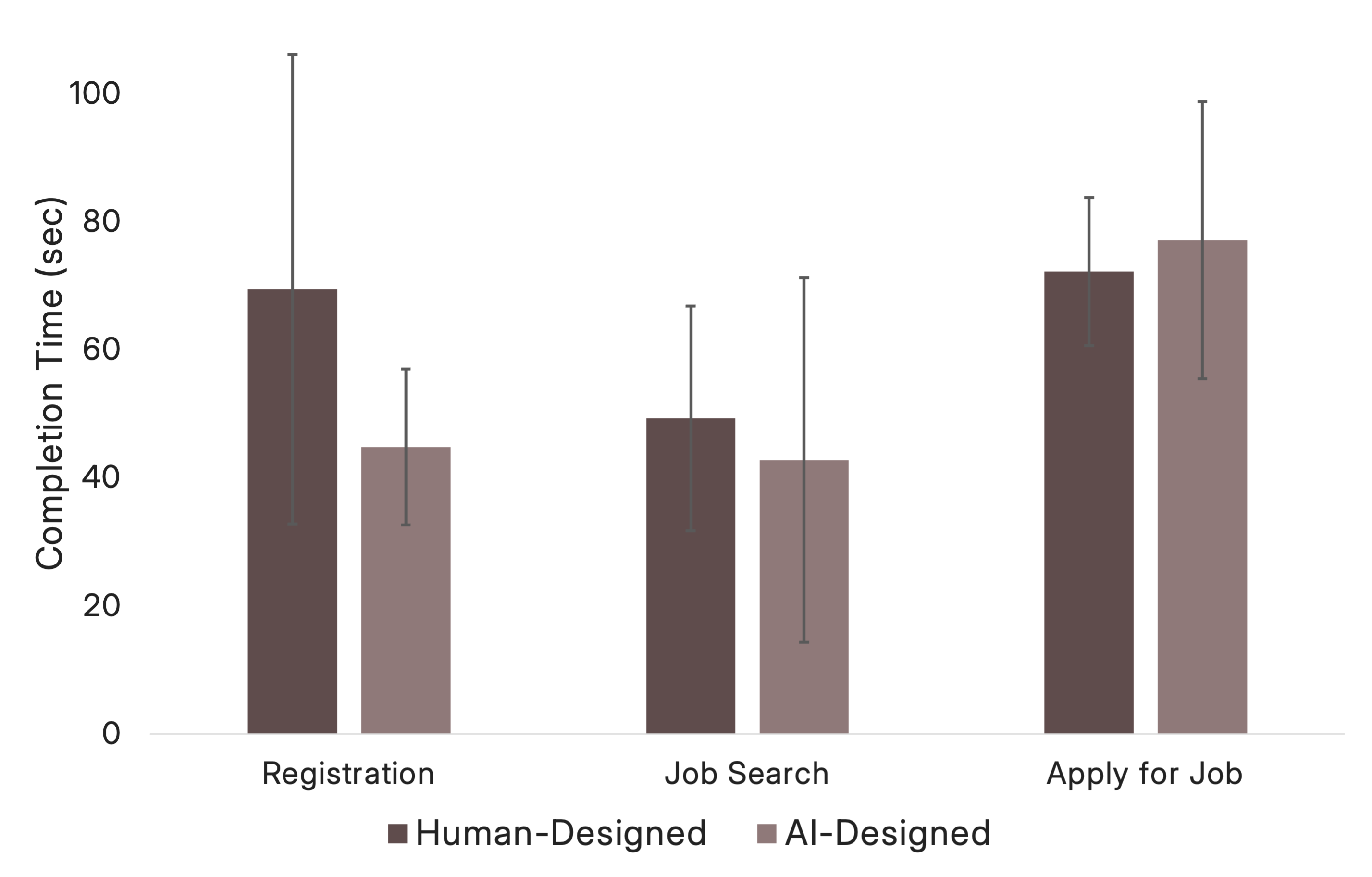

Participants completed three core tasks across both prototypes and rated their experience using a 7-point Likert scale. Satisfaction scores were averaged by task to compare ease of use. Task completion times were recorded automatically and manually adjusted to account for verbal feedback, ensuring accurate comparisons of efficiency between the Human-Designed and AI-Designed prototypes.
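The per-task averages, standard-deviation error bars, and time adjustments described above can be sketched as follows. The ratings and timings are placeholders rather than study data, and two choices are assumptions: the sample standard deviation (dividing by n - 1), since the report does not say which formula its error bars use, and treating the manual time adjustment as subtracting periods of verbal feedback from the recorded time. The function names are hypothetical.

```typescript
// Hypothetical sketch: ratings and timings are placeholders, not the study's data.
// Sample standard deviation (n - 1) is an assumption; the report does not state
// which formula its error bars use.

/** Arithmetic mean of a non-empty list. */
function mean(values: number[]): number {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

/** Sample standard deviation (divides by n - 1); needs at least two values. */
function sampleStdDev(values: number[]): number {
  const m = mean(values);
  const squaredDiffs = values.reduce((sum, v) => sum + (v - m) ** 2, 0);
  return Math.sqrt(squaredDiffs / (values.length - 1));
}

/**
 * Subtract seconds spent giving verbal feedback from the recorded task time,
 * one possible reading of "manually adjusted to account for verbal feedback".
 */
function adjustedSeconds(recorded: number, verbalFeedback: number[]): number {
  const talking = verbalFeedback.reduce((sum, s) => sum + s, 0);
  return Math.max(0, recorded - talking);
}

// Placeholder 7-point Likert ratings for one task on one prototype.
const ratings = [6, 7, 5, 6, 7, 6];
console.log(mean(ratings).toFixed(2), sampleStdDev(ratings).toFixed(2));

// Placeholder: 95 s recorded, with two think-aloud pauses of 12 s and 8 s.
console.log(adjustedSeconds(95, [12, 8])); // 75
```

Running the same two summary functions for each task and prototype would yield one bar and one error bar per condition in the charts.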

Average user satisfaction score across prototypes. Error bars represent standard deviation.

Average task completion time across prototypes. Error bars represent standard deviation.

This study suggests that AI has strong potential as a design tool, with "tool" being the key word. While the AI-generated prototype received positive feedback, it was not the clear favorite in all categories. Participants appreciated the depth of human-centered thinking behind features like the resume editor and dynamic job match score, which offered a stronger sense of progress and clarity. Meanwhile, ChatGPT overlooked key functionality, such as effective job search filters, and introduced color schemes that occasionally confused users.

However, AI excelled at producing a clean, intuitive layout with strong information hierarchy. Users noted the ease of scanning and task flow, reinforcing the insight that AI generates breadth (a wide range of layouts), while humans provide depth (strategic, empathetic design thinking).

While this research isolated human and AI contributions, real-world design involves collaboration between AI and designers. The most positively received AI elements were likely the result of careful prompting that aligned outputs with user needs. This suggests that even minimal human intervention can significantly enhance AI-driven design.

Next semester, I will explore best practices for prompting AI, refining its outputs, understanding model limitations, and studying how designers are integrating AI tools into real workflows today.

[1] Biplab Deka, Zifeng Huang, Chad Franzen, Joshua Hibschman, Daniel Afergan, Yang Li, Jeffrey Nichols, and Ranjitha Kumar. 2017. Rico: A Mobile App Dataset for Building Data-Driven Design Applications. In Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology (UIST '17). ACM, New York, NY, USA, 845–854.

[2] Mingming Fan, Serina Shi, and Khai N. Truong. 2020. Practices and Challenges of Using Think-Aloud Protocols in Industry: An International Survey. Journal of Usability Studies 15, 2 (2020), 85–102.

[3] Sarah Gibbons. 2018. Journey Mapping 101. Nielsen Norman Group. Retrieved from https://www.nngroup.com/articles/journey-mapping-101/.

[4] Jonathan Lazar, Jinjuan Heidi Feng, and Harry Hochheiser. 2017. Research Methods in Human-Computer Interaction (Second Edition). Wiley, Hoboken, NJ.

[5] Emily Kuang, Minghao Li, Mingming Fan, and Kristen Shinohara. 2024. Enhancing UX Evaluation Through Collaboration with Conversational AI Assistants: Effects of Proactive Dialogue and Timing. In Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI '24). ACM, New York, NY, USA, 16 pages.

[6] Emily Kuang, Xiaofu Jin, and Mingming Fan. 2022. "Merging Results Is No Easy Task": An International Survey Study of Collaborative Data Analysis Practices Among UX Practitioners. In CHI Conference on Human Factors in Computing Systems (CHI '22). ACM, New York, NY, USA, 16 pages.

[7] Savvas Petridis, Michael Terry, and Carrie J. Cai. 2024. PromptInfuser: How Tightly Coupling AI and UI Design Impacts Designers' Workflows. In Proceedings of the 2024 ACM Designing Interactive Systems Conference (DIS '24). ACM, New York, NY, USA, 743–756.

[8] Jason Wu, Yi-Hao Peng, Xin Yue Amanda Li, Amanda Swearngin, Jeffrey P. Bigham, and Jeffrey Nichols. 2024. UIClip: A Data-driven Model for Assessing User Interface Design. In Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology (UIST '24). ACM, New York, NY, USA, Article 45, 1–16.